Lesson 1: Introduction to Data Quality

Key terms

- Data quality: an assessment of a body of data based on its contents and intended use.

- Dimension: a broad characteristic of a collection of data, in terms of quality.

- Attribute: a detailed metric for measuring a dimension of data quality.

- Business process: a set of actions used to complete a specific operational task for an agency or system, such as the intake procedure for a person entering custody in a correctional facility.

- Centralized database: a collection of data that is stored and maintained in one location, rather than as local copies on individual computers.

- Data analyst: a type of data user who primarily processes and manipulates data to summarize and generate insights for other data users.

- Data collection: the process of systematically gathering and recording information.

- Data-driven decision-making: the process of making decisions based on objective, quantifiable evidence instead of intuition or personal experiences.

- Data manipulation: the process of organizing, structuring, aggregating, or otherwise modifying data to gain insights into the information it contains. This is not the process of faking data or “massaging” it to produce a particular finding.

- Data user: an individual or system that interacts with or uses data as a function of their work. Data users might need to access, analyze, interpret, or manipulate data to perform their job duties or fulfill their roles (e.g., a corrections analyst who uses data to generate reports that inform policymakers).

- Metadata: a set of information that describes a collection of data, including file format, variable definitions, when records were created and by whom, etc. Metadata does not include the actual contents of the data (i.e., specific values or entries).

- Stakeholder: a type of data user who primarily consumes data but does not typically create or manipulate it. Stakeholders often rely on data that has already been processed or interpreted to make informed decisions, as they may not have the skills or tools to analyze the data themselves (e.g., executives who rely on reports to make strategic decisions or members of the public who use data provided in dashboards).

- Quality assurance: a set of standards and practices that ensure the reliability, consistency, and accuracy of data and analyses through predetermined review and validation checks.

What is data quality?

The phrase “data quality” can mean different things to different people; there is no one concise and agreed-upon definition. Essentially, data quality refers to a collection of characteristics about the data, its intended use, and who is using it. What counts as “quality” data varies depending on the subject and context of the data, but generally, quality data is reliable, unbiased, relevant, unambiguous, and secure. The specific dimensions of data quality will be covered in more depth in subsequent sections of this course.

You may have heard the expression “garbage in, garbage out”: bad data gives bad results. Put another way, the quality of your analysis depends on the quality of your data. For example, say you want to determine the average age of people in a dataset based on a variable called “age.” However, due to data entry errors, this variable sometimes contains a person’s shoe size rather than their age. Even if you calculate the average correctly, the result is still wrong: it isn’t the average age but the average of some ages and some shoe sizes.
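The age example can be made concrete with a minimal Python sketch. The values, the variable names, and the plausibility range of 18 to 100 are all assumptions chosen for illustration; they are not taken from any real dataset or standard.

```python
from statistics import mean

# Hypothetical values from an "age" field; 9.5 and 11 are shoe sizes entered by mistake.
recorded_ages = [34, 27, 9.5, 45, 11, 52]

# Assumed plausibility range for an adult population (an illustration, not a standard).
MIN_AGE, MAX_AGE = 18, 100


def split_plausible(values, low=MIN_AGE, high=MAX_AGE):
    """Separate values inside the expected range from values that need review."""
    plausible = [v for v in values if low <= v <= high]
    suspect = [v for v in values if not (low <= v <= high)]
    return plausible, suspect


plausible_ages, suspect_values = split_plausible(recorded_ages)

naive_average = mean(recorded_ages)     # mixes ages and shoe sizes
checked_average = mean(plausible_ages)  # uses only values that pass the range check

print(f"Average of all values:       {naive_average}")
print(f"Average of plausible values: {checked_average}")
print(f"Values flagged for review:   {suspect_values}")
```

A range check like this only catches errors that fall outside the expected range. A wrong value that happens to look like a plausible age would pass unnoticed, which is one reason data quality cannot be reduced to a single check.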

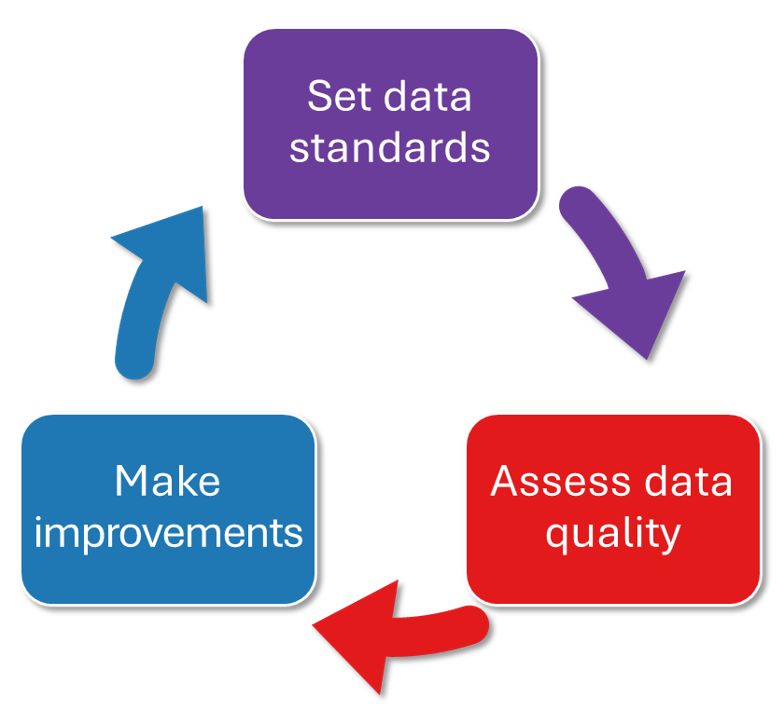

As shown in Figure 1, data quality is a continuous process, not a one-time check. Because your agency’s data is always changing, with records added or updated daily, it is imperative to conduct data quality checks regularly and adjust based on what you find. Does the data documentation need to be updated? Do staff need additional training in data entry? Have business processes changed in a way that impacts the data? Monitoring changes to data quality will help catch data problems before they become data catastrophes.

Why is data quality important?

Assessing the quality of your data can provide valuable information about how the data may be used, interpreted, and communicated.

High-quality data plays a critical role in supporting both day-to-day operations and long-range strategic planning. It allows agencies to make better-informed decisions in areas such as population management and resource allocation. Low-quality data, by contrast, can lead to flawed decisions whose effects ripple across a system. For instance, low-quality data on housing for incarcerated people may lead to overcrowding and undermine an agency’s ability to accommodate the needs of the population.

The quality of your data also impacts your ability to build and maintain trust. High-quality data is necessary for accountability and transparency, providing stakeholders, including oversight bodies and elected officials, with information to assess the services and operations of a given agency. Stakeholders must trust that the data is accurate to make informed decisions; they may understandably lose confidence in low-quality data, which could eventually lead them to stop using data to guide their decisions.

As a data analyst, you might not have control over some aspects of data quality, such as the type of data management system(s) used by your agency, what operations staff are required to record when they enter data, or where data files are stored. However, understanding how those decisions impact the quality of the data is crucial. To produce reliable and accurate analysis, you need reliable and accurate data.

For example, say the data system your agency uses doesn’t have a dedicated field for recording program completion dates, so that information gets entered in the “officer notes” field instead. This field also contains other miscellaneous case information, which makes analyzing program completion rates challenging. Being able to explain to agency leadership how this workaround impacts reporting could lead them to negotiate with the vendor to add this field.
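To illustrate why this workaround makes analysis challenging, here is a minimal Python sketch that tries to recover completion dates from free-text notes. The sample notes, the two date formats, and the keyword rule are all hypothetical; real notes would likely contain many more variations than this sketch handles.

```python
import re
from datetime import date, datetime

# Hypothetical free-text entries from an "officer notes" field.
notes = [
    "Client completed program 03/14/2024. Follow-up scheduled.",
    "Program completion date: 2024-05-02; transferred units.",
    "Completed anger mgmt last week, see file.",
    "Visit approved for family on 06/01/2024.",
]

# Assumed date formats; each pattern is paired with its strptime format string.
DATE_PATTERNS = [
    (re.compile(r"\b(\d{2}/\d{2}/\d{4})\b"), "%m/%d/%Y"),
    (re.compile(r"\b(\d{4}-\d{2}-\d{2})\b"), "%Y-%m-%d"),
]
COMPLETION_HINT = re.compile(r"complet", re.IGNORECASE)


def extract_completion_date(note):
    """Return a date only if the note mentions completion and has a parseable date."""
    if not COMPLETION_HINT.search(note):
        return None
    for pattern, fmt in DATE_PATTERNS:
        match = pattern.search(note)
        if match:
            try:
                return datetime.strptime(match.group(1), fmt).date()
            except ValueError:
                continue  # looked like a date but was not valid (e.g., month 13)
    return None


extracted = [extract_completion_date(n) for n in notes]
for note, found in zip(notes, extracted):
    print(f"{found!s:>10} | {note}")
```

In this sketch, the third note records a completion but gives no usable date, so it is lost to the analysis. A note that mentions completion alongside an unrelated date would be picked up incorrectly. A dedicated, structured date field avoids both problems.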

Dimensions of data quality

Data quality can be broken out into four dimensions, each with distinct measurable attributes.[1]

| Dimension | Definition | Attributes |
|---|---|---|
| Intrinsic | The data is accurate, reliable, credible, and impartial. | Accuracy, Believability, Reputation, Objectivity |
| Contextual | The data meets the needs of a particular use case. | Relevance, Completeness, Timeliness |
| Representational | The data is concise, consistent, interpretable, and easy to understand. | Understandability, Interpretability, Consistency |
| Accessibility | The data is available and easy to access and use. | Accessibility, Access security |

Intrinsic Data Quality

This dimension of data quality refers to the characteristics of the data that make it accurate, reliable, credible, and impartial. For your agency, maintaining high intrinsic data quality is important to ensure trust and support decision-making using your administrative data.

Contextual Data Quality

This dimension of data quality focuses on data being appropriate for its intended use. This dimension involves different considerations depending on who is using the data and what it’s used for. Maintaining contextual data quality is important to ensure that data are being used for appropriate analyses.
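As one illustration of a contextual attribute, completeness is often approximated as the share of records with a non-missing value in a given field. The sketch below assumes that simple definition; the records and field names are hypothetical, and later lessons may define the attribute more precisely.

```python
# Hypothetical records; None marks a missing value.
records = [
    {"person_id": 1, "admission_date": "2024-01-05", "release_date": None},
    {"person_id": 2, "admission_date": "2024-02-11", "release_date": "2024-06-30"},
    {"person_id": 3, "admission_date": None, "release_date": None},
    {"person_id": 4, "admission_date": "2024-03-19", "release_date": "2024-09-01"},
]


def completeness(rows, field):
    """Share of rows where `field` holds a non-empty value (0.0 for an empty list)."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(field) not in (None, ""))
    return filled / len(rows)


admission_completeness = completeness(records, "admission_date")
release_completeness = completeness(records, "release_date")

print(f"admission_date completeness: {admission_completeness:.0%}")
print(f"release_date completeness:   {release_completeness:.0%}")
```

Whether a given score is a problem depends on the intended use. A missing release date may simply mean the person is still in custody, while a missing admission date is more likely a data entry gap.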

Representational Data Quality

This dimension of data quality focuses on the understandability, interpretability, and consistency of data. Representational data quality ensures that data users understand the nuances and limitations of the data well enough to make well-founded claims. Maintaining it helps your agency ensure that appropriate claims are made using its administrative data.

Accessibility Data Quality

This dimension focuses on the extent to which data are available and the ease with which users can access them. Making data technically accessible is not enough; administrators must also consider how easily users can retrieve and manipulate the data. Maintaining accessibility is important for your agency to protect sensitive data and ensure that data are securely accessible for analyses.

While most of these dimensions relate specifically to the needs, perspectives, and tasks of a data analyst, they also allow for the needs and perspectives of other data stakeholders. The rest of this course will cover each data quality dimension and its attributes in more detail, including the best practices associated with each data quality dimension. This course will end with a data quality assessment that you can employ within your agency to understand your data and ensure that you are maintaining high-quality data.

Who is responsible for data quality?

Data quality is a cyclical process that involves continuous evaluation, and it also requires engagement at all levels of an organization. While everyone is responsible for data quality, it is the department of corrections (DOC) secretary or commissioner and the IT director, along with the research director, who are responsible for ensuring that organizational resources are devoted to maintaining data quality. This would involve the following:

- Establishing clear data governance policies and procedures including policies for clear data documentation and standardizing the data entry processes

- Developing training standards and policies for staff to engage with the data system and the data entry process

- Investing in auditing data entry on the front end of the system

- Establishing and maintaining data collection standards and procedures that include periodic data quality checks

- Ensuring compliance with regulatory requirements and data safety standards

- Establishing clear audit and accountability policies including verification checks, error reporting, correction processes, and data archiving procedures
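As a rough illustration of what a verification check with error reporting might look like in practice, the following Python sketch applies two rules to each record and collects any failures into a report. The records, field names, and rules are hypothetical; an agency would define its own checks based on its data and business processes.

```python
from datetime import date

# Hypothetical records; the second and third contain deliberate errors.
records = [
    {"person_id": 101, "admission_date": date(2024, 1, 5), "release_date": date(2024, 3, 1)},
    {"person_id": 102, "admission_date": date(2024, 4, 9), "release_date": date(2024, 2, 1)},
    {"person_id": None, "admission_date": date(2024, 5, 2), "release_date": None},
]


def check_record(record):
    """Return a list of rule violations for one record (empty if it passes)."""
    errors = []
    if record["person_id"] is None:
        errors.append("missing person_id")
    admitted, released = record["admission_date"], record["release_date"]
    if admitted and released and released < admitted:
        errors.append("release_date before admission_date")
    return errors


# Build an error report listing only the rows that failed at least one check.
error_report = []
for index, record in enumerate(records):
    errors = check_record(record)
    if errors:
        error_report.append({"row": index, "errors": errors})

for entry in error_report:
    print(f"Row {entry['row']}: {', '.join(entry['errors'])}")
```

Running a report like this on a schedule, and routing the flagged rows to whoever can correct them, is one way the verification, error reporting, and correction steps listed above could fit together.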

In the upcoming lessons, you will be learning about the key dimensions of data quality, which will provide you with a deeper understanding of its foundational components. These lessons are designed to be both theoretical and insightful, promoting a strong grasp of data quality principles.

Making large system-wide improvements can take time and require decisions that are outside your control as a corrections analyst. But there may be some practices that you can begin to integrate into your work in the meantime. After going through this course, you will be better equipped to identify what your data can and cannot do and which questions it can answer. With this understanding of data quality, you will be prepared to respond to data requests and to be transparent about what you can answer with the data your agency maintains and what would require better data. This process can help position your agency to implement the strategies outlined in this course, while clearly demonstrating how enhancing data quality will benefit the agency and expand the range of questions it can address.

[1] Yang Lee et al., “AIMQ: A Methodology for Information Quality Assessment,” Information and Management 40, no. 2 (2002): 133–146; Agung Wahyudi, George Kuk, and Marijn Janssen, “A Process Pattern Model for Tackling and Improving Big Data Quality,” Information Systems Frontiers 20, no. 3 (2018): 457–469, https://doi.org/10.1007/s10796-017-9822-7; Richard Y. Wang and Diane M. Strong, “Beyond Accuracy: What Data Quality Means to Data Consumers,” Journal of Management Information Systems 12, no. 4 (1996): 5–33, http://www.jstor.org/stable/40398176; Hongwei Zhu et al., “Data and Information Quality Research: Its Evolution and Future,” in Computing Handbook, 3rd ed., ed. Heikki Topi and Allen Tucker (Chapman and Hall/CRC, 2014).