Lesson 4: Contextual Data Quality

Contextual data quality focuses on the appropriateness of data for use within a specific context or setting. This dimension emphasizes that data quality is not absolute but relative to how, by whom, and for what purpose the data will be used. Consider the example below of how the contextual data quality of education program enrollment data for incarcerated people may change depending on who is using the data and what is most relevant to them:

A program administrator might prioritize timely, detailed information that includes age, education level, and program metrics (hours enrolled, progress in course).

As an analyst, you might be asked to provide aggregate statistics on participation rates, completion rates, and program outcomes to inform program evaluations in your state. Thus, you might be more interested in the data’s ability to be used for this aggregate analysis rather than specific individual-level details.

A legislator may be more interested in trends related to program goals, which could inform funding decisions focused on long-term outcomes such as lower recidivism rates or lower rates of prison misconduct.

Contextual data quality is important because it ensures that data is aligned with the needs of the DOC. If the data is not suitable for the needs of different stakeholders such as program administrators, prison staff, analysts, and policymakers, it can lead to misinformed decisions. Thus, maintaining contextual data quality is important for the following reasons:

Appropriateness for use case: Data must be suitable for the specific purpose it’s being used for. For example, data used for policy decisions may require aggregate statistics, while data for operations decisions may need to be more detailed and updated in real time.

Meeting user needs: Different stakeholders might have different data needs and priorities for their purposes. For example, as an analyst, you might prioritize completeness and accuracy. The DOC director might prioritize clarity and the ability to meet reporting requirements. A corrections officer or prison administrator may prioritize real-time updates and accuracy.

Efficiency: The quality of a dataset depends on its intended use. If it’s not structured to support required analyses, it can lead to misinterpretation, rework, and delays in decision-making. When data does not align with user needs, it will require additional work to prepare it for analyses (e.g., cleaning, data transformation), reducing efficiency. Ensuring that data is appropriate and usable for all stakeholders enhances organizational efficiency and effectiveness.

Once data is collected and recorded, it’s very difficult to change the quality of the entries. Thus, contextual data quality relies heavily on the previous dimension of intrinsic data quality. This is why maintaining high intrinsic data quality (as you learned in the previous lesson) is an important first step. If data are entered and maintained to the highest standards of quality, then you can focus on contextual data quality to ensure the data are being used in the appropriate way and are relevant to the DOC’s needs.

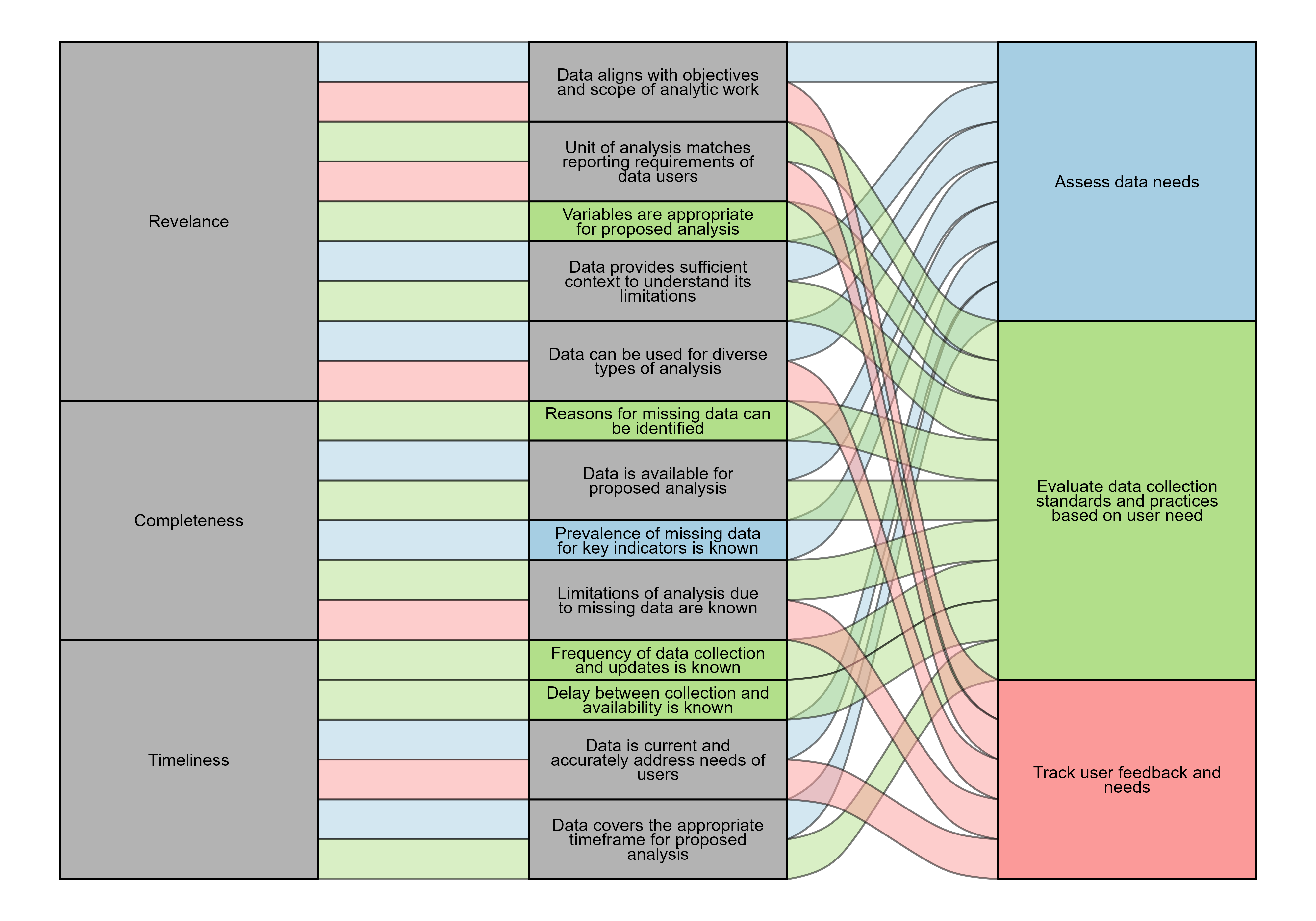

Contextual data quality has three attributes: relevance, completeness, and timeliness.

| Quality Attribute | Definition | Example |
|---|---|---|
| Relevance | The extent to which data are applicable and helpful for the task at hand. | An analysis focused on participation in programming requires data to be collected on participation. |
| Completeness | The extent to which data are of sufficient breadth, depth, and scope for the task at hand. | Analyses focused on racial and ethnic disparities need complete data on racial and ethnic identity. For example, if the analysis requires comparisons between White, Black, and Hispanic individuals, you will need to have data that identifies race and ethnicity separately. |
| Timeliness | The extent to which the period reflected in the data is appropriate for the task at hand. | An analysis of the current population requires data that is up to date. |

The following sections discuss each attribute in more detail and guide you through important considerations when assessing contextual data quality. This is followed by a list of best practices that you and your agency can implement to ensure that the highest standards of contextual data quality are met for all users. Compared with intrinsic data quality, more of these practices may be within your control as an analyst, because you often decide whether the data is appropriate for the analysis you’re conducting and the questions leadership asks you to answer. However, assessing the appropriateness of the data for use by others (e.g., program administrators, DOC staff) may not be in your control, which is why consulting with different users and assessing their data needs will be important.

Relevance

Definition: The extent to which data are applicable and helpful for the task at hand.

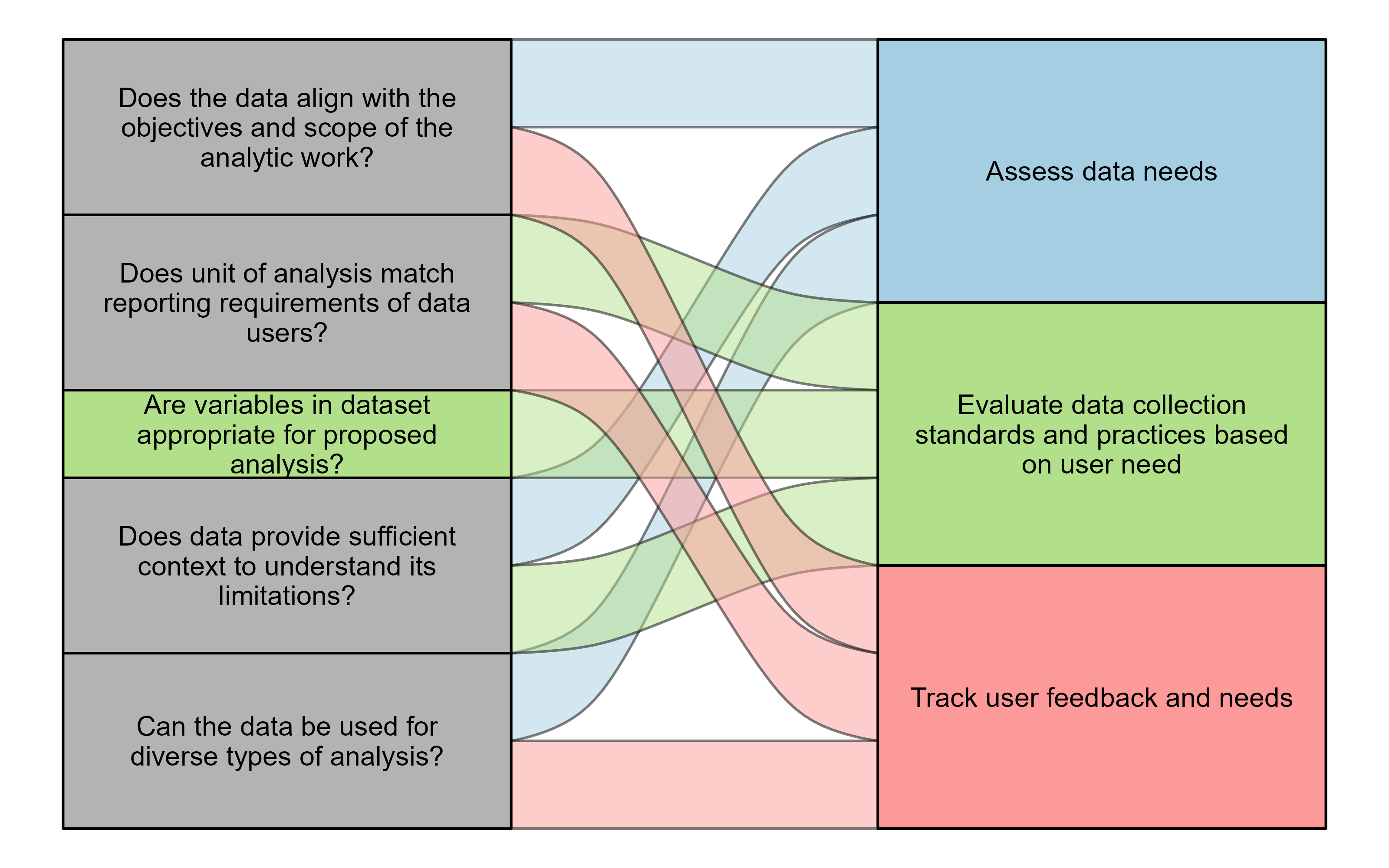

Relevance is a key component of contextual data quality that focuses on making sure that the data used is applicable and appropriate for the specific task, analysis, or decision-making process at hand. Relevance is subjective and depends on the perspectives of the user or stakeholder. It’s important to remember that data that’s relevant for one analysis may not be appropriate for another. Data that’s relevant to a service provider entering or reviewing data may be different from the data that’s relevant to an analyst conducting specific analyses, which may be different from what’s relevant to the DOC director making strategic decisions. The relevance of the data should be regularly assessed and validated to make sure that any conclusions based on the data are meaningful and actionable.

Assessing and ensuring the relevance of data requires knowing, understanding, and meeting the needs of the data user. This process may include observing the operational processes related to data collection and entry, learning the needs of different data users, understanding the reporting requirements that rely on the data, and reflecting on the types of research questions or analyses that are requested. Assessing relevance may also rely on examining the metadata to understand where data originated, how it was entered, and how it has been updated. Use the following questions as a guide to assess relevance across a broad range of users and data needs:

- Does the data align with the objectives and scope of the analytic work?

- Does the unit of analysis match the reporting requirements of the data users (e.g., is data recorded at the individual level while most of the reporting requirements call for facility-level aggregates)?

- Are the variables in the dataset appropriate for the proposed analysis?

- Does the data provide sufficient context to understand its limitations?

- Can the data be used for diverse types of analysis (e.g., tracking individuals, aggregate statistics, program participation)?
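
The unit-of-analysis question above can be checked with a quick aggregation. The following is a minimal sketch in Python, assuming individual-level enrollment records with hypothetical field names (`person_id`, `facility`, `program`) and made-up values. It shows one way to roll individual records up to facility-level counts when the reporting requirement calls for aggregates.

```python
from collections import Counter

# Hypothetical individual-level enrollment records (all values are made up).
records = [
    {"person_id": 1, "facility": "A", "program": "GED"},
    {"person_id": 2, "facility": "A", "program": "GED"},
    {"person_id": 3, "facility": "B", "program": "GED"},
    {"person_id": 2, "facility": "A", "program": "Vocational"},
]


def facility_enrollment_counts(rows):
    """Count distinct people enrolled in any program, per facility."""
    seen = set()
    counts = Counter()
    for row in rows:
        key = (row["facility"], row["person_id"])
        # A person enrolled in two programs at one facility is counted once.
        if key not in seen:
            seen.add(key)
            counts[row["facility"]] += 1
    return dict(counts)


facility_counts = facility_enrollment_counts(records)
print(facility_counts)
```

Counting distinct people rather than rows is a design choice made for this sketch. If the reporting requirement asks for enrollments rather than people, you would count rows instead, which is why confirming the unit of analysis with the data user comes first.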

Example

Imagine a scenario where you need to calculate an accurate count of the number of incarcerated people who have a severe mental illness (SMI). Unfortunately, the state did not begin collecting this information in its data system until two years ago and has not been able to enter the information for everyone in the state’s corrections system. A colleague proposes that you examine the number of people who are taking psychotropic medications and use this to estimate the number of people who have an SMI. While this may be a reasonable proxy, it will omit people who have an SMI but are not receiving medication. You must assess the relevance of using medication as a proxy for SMI, and if you decide to use this measure, you must determine what conclusions you can draw from your analysis. Because you’re likely missing a subset of people with an SMI, your analysis will be limited, and you will undercount people who are incarcerated and have an SMI.
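
One way to get a rough sense of how much the proxy might miss is to test it on the people whose SMI status has already been recorded. The sketch below uses hypothetical field names (`smi_flag`, `on_psychotropic_meds`) and made-up records. It assumes that people with a recorded flag resemble those without one, which may not hold in your data.

```python
# Hypothetical records; smi_flag is None where the field was never entered.
people = [
    {"id": 1, "smi_flag": True, "on_psychotropic_meds": True},
    {"id": 2, "smi_flag": True, "on_psychotropic_meds": False},
    {"id": 3, "smi_flag": False, "on_psychotropic_meds": False},
    {"id": 4, "smi_flag": None, "on_psychotropic_meds": True},
    {"id": 5, "smi_flag": True, "on_psychotropic_meds": True},
    {"id": 6, "smi_flag": None, "on_psychotropic_meds": False},
]


def proxy_capture_rate(rows):
    """Share of people with a recorded SMI whom the medication proxy also identifies.

    Returns None when no one has a recorded SMI flag of True.
    """
    known_smi = [r for r in rows if r["smi_flag"] is True]
    if not known_smi:
        return None
    captured = sum(1 for r in known_smi if r["on_psychotropic_meds"])
    return captured / len(known_smi)


rate = proxy_capture_rate(people)
print(f"Proxy identifies {rate:.0%} of people with a recorded SMI flag")
```

A capture rate well below 100 percent would suggest that counts based on the proxy should be reported as a likely undercount, with the limitation stated alongside the result.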

Completeness

Definition: The extent to which data are of sufficient breadth, depth, and scope for the task at hand.

Completeness is the degree to which all necessary information is present and available within a dataset. It’s one of the most commonly tracked data quality attributes. If certain data points are missing, your analysis may reflect the characteristics of the available data rather than the larger population you’re studying, which may suggest patterns that don’t reflect reality. In the example above, you had an incomplete data field (the SMI indicator), and you were deciding on the relevance of using a different field, receipt of psychotropic medications, as a proxy. Using this proxy, you may undercount the number of people with an SMI because you exclude those who aren’t receiving medication. This would be a poor reflection of the reality of SMI among the population.

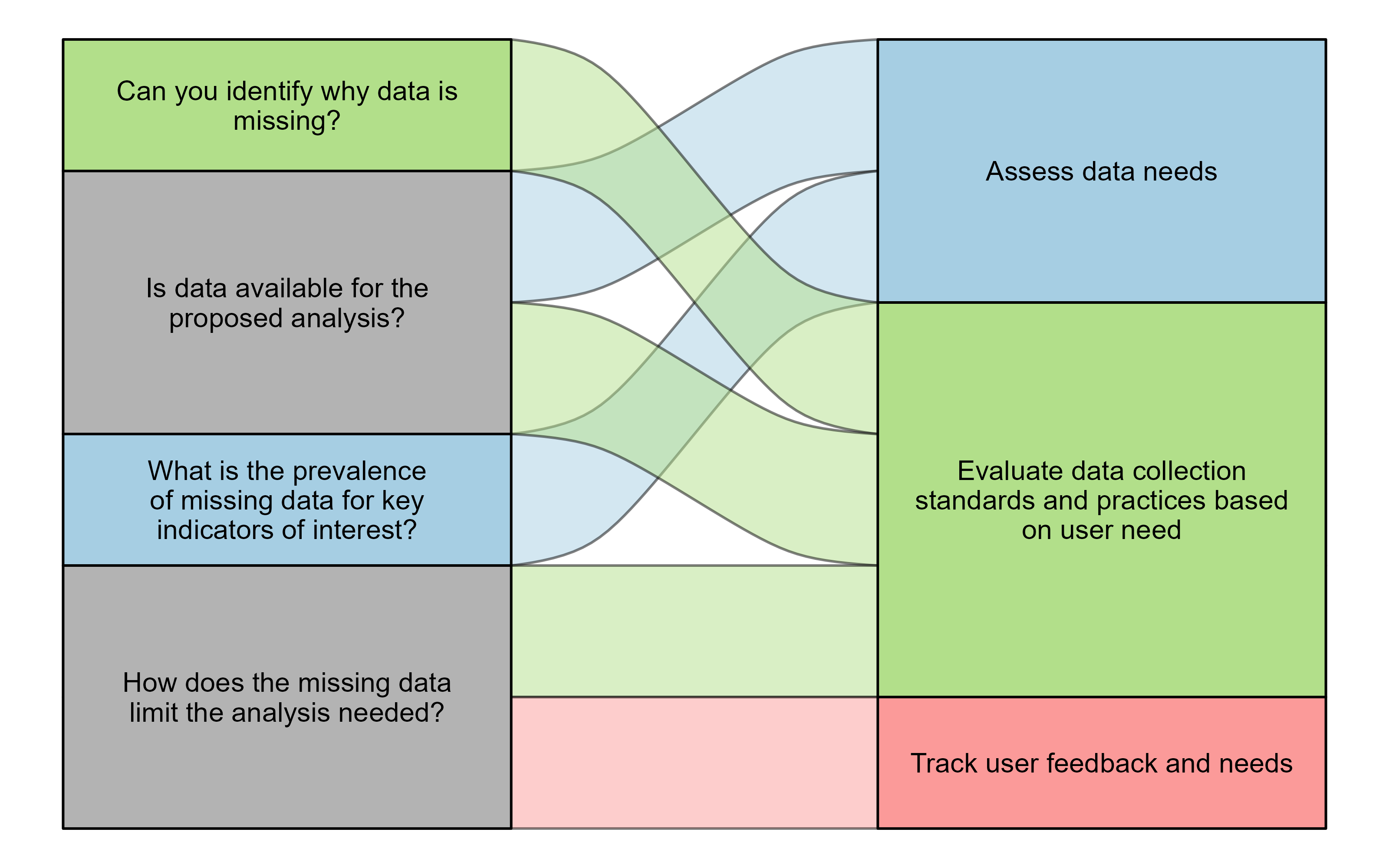

Ensuring completeness involves an ongoing effort to collect, maintain, and use comprehensive data to support a variety of operational, analytical, and decision-making purposes within a DOC (or any organization). This process is supported by implementing the best practices explained in the prior lesson on intrinsic data quality. However, completeness as an attribute of contextual data quality focuses on assessing which data fields are present or missing for the specific analysis. You can consider the following prompts when assessing completeness:

- Is data available for the proposed analysis?

- Can you identify why data is missing (e.g., data entry error, data not collected)?

- What is the prevalence of missing data for key indicators of interest?

- How does the missing data limit the analysis needed?
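
The prevalence prompt above lends itself to a quick calculation. This is a minimal sketch, assuming records stored as dictionaries with hypothetical field names and made-up values, and treating both `None` and empty strings as missing. Your system may encode missing values differently (for example, as "Unknown" or a placeholder date).

```python
# Hypothetical records (all values are made up).
records = [
    {"id": 1, "parole_eligibility_date": "2024-03-01", "race": "White", "ethnicity": "Not Hispanic"},
    {"id": 2, "parole_eligibility_date": None, "race": "Black", "ethnicity": ""},
    {"id": 3, "parole_eligibility_date": "2025-07-15", "race": "", "ethnicity": "Hispanic"},
    {"id": 4, "parole_eligibility_date": None, "race": "White", "ethnicity": None},
]


def missing_rates(rows, fields):
    """Return the share of rows where each field is absent, None, or an empty string."""
    total = len(rows)
    rates = {}
    for field in fields:
        missing = sum(1 for r in rows if r.get(field) in (None, ""))
        rates[field] = missing / total if total else 0.0
    return rates


rates = missing_rates(records, ["parole_eligibility_date", "race", "ethnicity"])
for field, share in rates.items():
    print(f"{field}: {share:.0%} missing")
```

The rates tell you how much is missing but not why. Identifying the cause (data entry error, data not collected) still requires talking with the staff who enter and maintain the data.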

Example

Each year, federal reporting rules require collecting information on the number of people who remain in prison past their parole eligibility. Imagine that your agency cannot provide this information because the parole eligibility date is missing for one-third of people who could be released on parole. It turns out that this date is listed in the files the DOC received from courts but did not make it into the data management system for some reason. To address this issue, you work with leadership to create a list of data elements that must be entered at intake and begin the process of requesting a change in the management system to make this a required field.

Timeliness

Definition: The extent to which the period reflected in the data is appropriate for the task at hand.

Timeliness is a critical attribute of contextual data quality and emphasizes the importance of data being current and relevant when it’s needed for decision-making, analysis, or operational use. Ensuring the timeliness of data allows decisions to be based on current information rather than outdated data.

It’s important to understand that there is no universal agreement or standard for what is considered up-to-date or real-time data. Your data system may capture data at different points in time, and your agency should have a policy, maintained through organizational standards as explained in the previous lesson, that specifies how frequently data must be updated. However, assessing contextual data quality goes beyond the questions and best practices related to intrinsic data quality. While data may be entered into your system in a timely manner, that does not necessarily mean the data is timely for the analyses you’re conducting. Contextual data quality requires assessing whether the data is timely for the current use. When you encounter a situation where the data is not appropriate for your analysis, you’ll need to determine the best use of the data for your purposes while acknowledging its limitations.

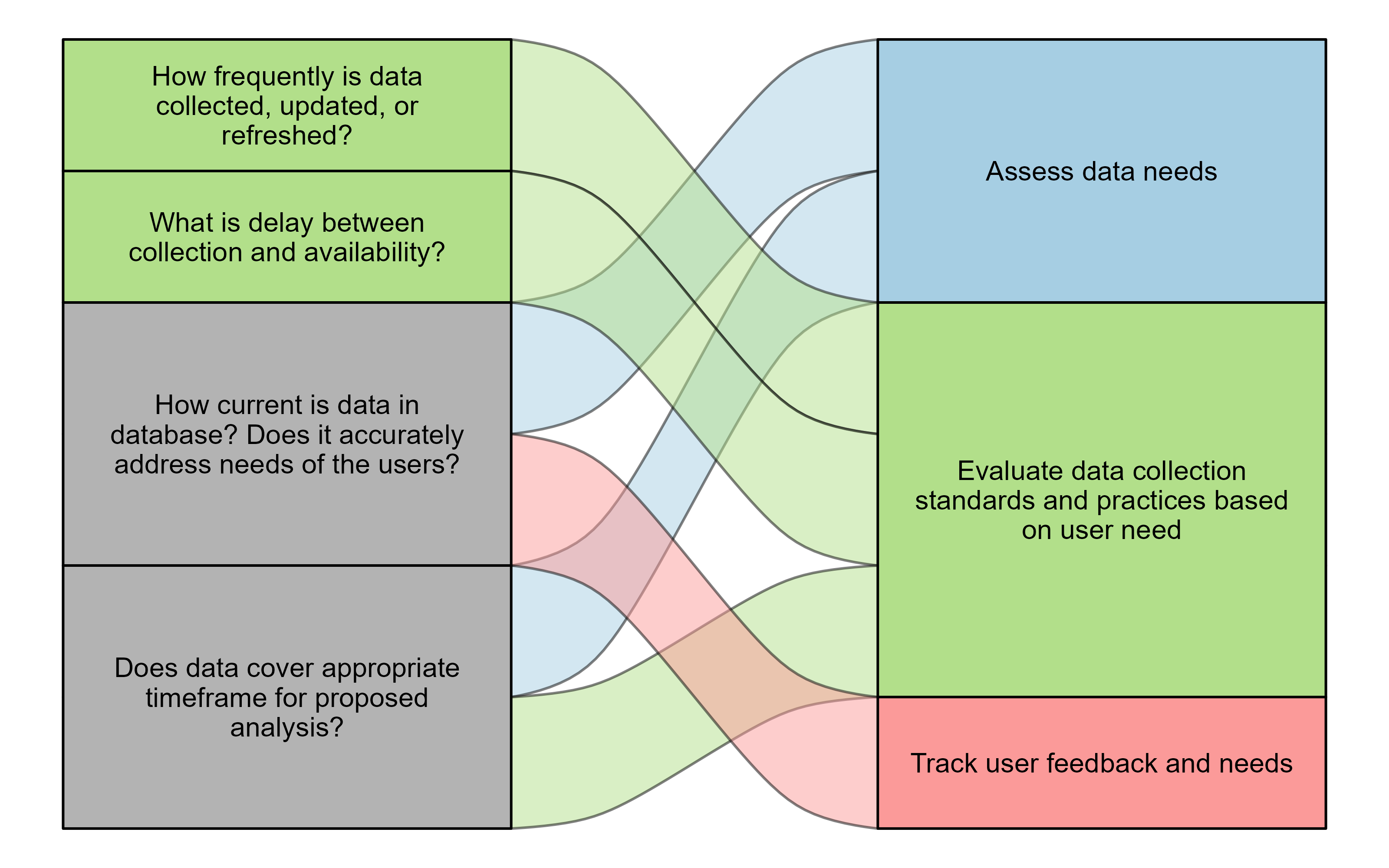

In evaluating the timeliness attribute of data quality, administrators and users should consider the following questions:

- How frequently is the data collected, updated, or refreshed?

- How long is the delay between collection and availability?

- How current is the data in a database? Does it accurately address the needs of users?

- Does the data cover the appropriate timeframe for the proposed analysis?
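
Two of the questions above, the delay between collection and availability and the timeframe covered, can be checked directly when your data includes both an event date and an entry date. The sketch below assumes hypothetical field names (`event_date`, `entered_date`) and made-up dates. Many systems do not store an entry timestamp, in which case this check is not possible as written.

```python
from datetime import date
from statistics import median

# Hypothetical records with the date something happened and the date it was entered.
rows = [
    {"event_date": date(2024, 1, 10), "entered_date": date(2024, 1, 12)},
    {"event_date": date(2024, 2, 1), "entered_date": date(2024, 3, 2)},
    {"event_date": date(2024, 3, 15), "entered_date": date(2024, 3, 20)},
]


def entry_lags_in_days(records):
    """Days between each event and its entry into the data system."""
    return [(r["entered_date"] - r["event_date"]).days for r in records]


def covers_period(records, start, end):
    """Rough check: do the recorded event dates span the requested analysis period?"""
    dates = [r["event_date"] for r in records]
    if not dates:
        return False
    return min(dates) <= start and max(dates) >= end


lags = entry_lags_in_days(rows)
print("Median entry lag (days):", median(lags))
print("Longest entry lag (days):", max(lags))
print("Covers Jan-Jun 2024:", covers_period(rows, date(2024, 1, 1), date(2024, 6, 30)))
```

The coverage check only compares the earliest and latest dates, so it will not detect gaps in the middle of the period. Treat it as a first screen rather than a full assessment.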

Example

Imagine your state is in the middle of its legislative session, and legislators are curious about the number of people enrolled in a specific corrections program over the last five fiscal years. You identify the necessary data for this request but notice that program enrollment for this fiscal year is much lower than in prior years. To learn more about what may be occurring this year, you call the lead administrative/data entry staffer at the state’s largest facility. You learn that the data for programming is entered into the data system after each session has concluded. Final data for this fiscal year will not be available for at least another two weeks, when the program ends. Given the urgency of this data request, you note that data collection is not complete for the fiscal year and either (1) project program enrollment for this fiscal year based on trends or (2) provide a trend that covers only the complete prior fiscal years.
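
Both options in this example can be sketched in a few lines. The enrollment counts below are made up, and the projection method (adding the average year-over-year change across complete years) is an assumption chosen for simplicity, not a recommended forecasting approach. Your agency may prefer a different method.

```python
# Hypothetical enrollment counts by fiscal year; the latest year is still being entered.
enrollment_by_fy = {2020: 410, 2021: 425, 2022: 440, 2023: 455, 2024: 180}
incomplete_years = {2024}


def complete_years_only(series, incomplete):
    """Option 2: drop fiscal years whose data collection is not finished."""
    return {fy: n for fy, n in series.items() if fy not in incomplete}


def project_next(series):
    """Option 1: project the next year by adding the average year-over-year change."""
    years = sorted(series)
    values = [series[y] for y in years]
    if len(values) < 2:
        raise ValueError("Need at least two complete years to project a trend")
    changes = [later - earlier for earlier, later in zip(values, values[1:])]
    return values[-1] + sum(changes) / len(changes)


complete = complete_years_only(enrollment_by_fy, incomplete_years)
projected = project_next(complete)
print("Complete years:", complete)
print("Projected enrollment for the incomplete year:", projected)
```

Whichever option you choose, label the result clearly in what you send to legislators: either the current fiscal year is a projection, or it is excluded because data collection is not complete.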

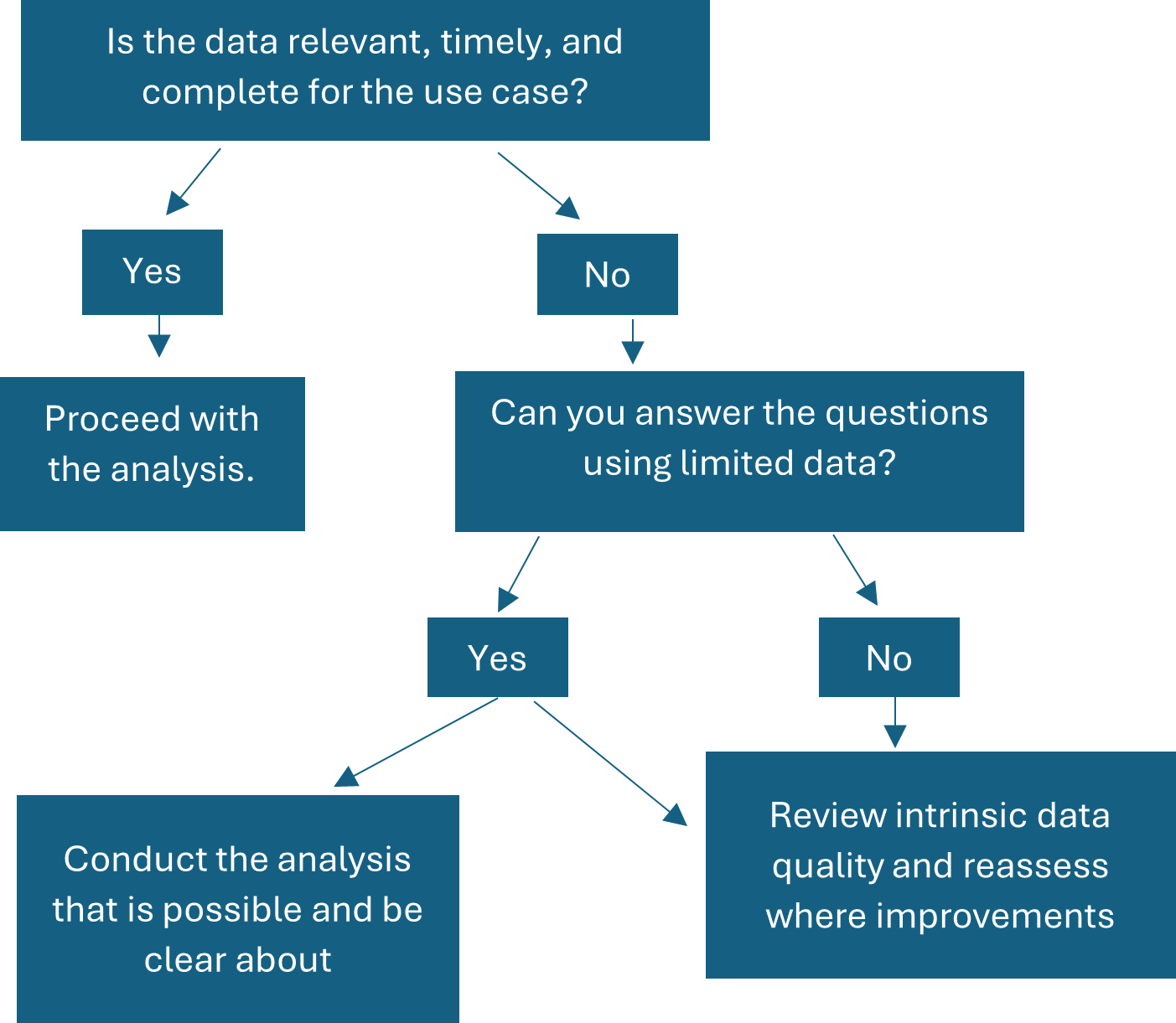

Assessing contextual data quality

Data that maintains high contextual data quality is relevant, complete, and timely for the task at hand. If your data meets these standards for your intended use, then it’s considered to have “high” contextual data quality. You’ll likely encounter situations where data does not meet the standards set by this dimension, but you still need to answer questions for stakeholders or meet reporting requirements. For a short-term solution, you might decide to use the available data to answer the questions (e.g., by using a proxy). In this case, you should weigh possible options and make the decision that best fits your needs and suits the analysis you need to conduct while acknowledging limitations. In the long term, you may want to work with your leadership to implement the best practices of intrinsic data quality that would help ensure high contextual data quality for similar analyses in the future.

Best practices

Maintaining high contextual data quality requires regular efforts to keep data updated and relevant for all users. Implementing best practices can help proactively enhance contextual data quality while addressing the diverse needs of users and ensuring those needs are effectively met.

The section below highlights these best practices, the attributes they are associated with, and the steps you (and your agency) can take to follow them and maintain high contextual data quality. Some of these will overlap with the best practices you learned in the previous lessons, as these dimensions of data quality build on and overlap with one another.

| Practice | Attribute |
|---|---|
| Assess data needs. | Relevance, Completeness, Timeliness |
| Evaluate data collection standards and practices based on user need. | Relevance, Completeness, Timeliness |
| Track user feedback and needs. | Relevance, Completeness, Timeliness |

Assess data needs

Assess data requirements for the variety of operational needs and research applications that pertain to your agency. In this process, align needs with internal and external requirements to ensure that data is relevant to internal users and external stakeholders. Provide data in context so that users can understand how it relates to their needs and concerns. A practical way to do this is to conduct a needs assessment to understand the user population both inside and outside the organization.

Evaluate data collection standards and practices based on user need

This practice may seem similar to what is outlined under intrinsic data quality, but for contextual data quality pay particular attention to the elements of completeness and timeliness of the data for the current analysis. Suggest updates to data collection processes to ensure that data are captured in a way that’s consistent with internal policies, external standards, and user needs. Recommend the frequency of updates and quality assurance to ensure complete and timely data. Interview staff to understand what they think a variable means when they enter it and how they use variables operationally; it may be different from what analysts assume.

Track user feedback and needs

Build formal mechanisms for users to provide feedback to continuously ensure that data is relevant and useful. Allow users to share their unique data needs. Also, track changes in needs over time. A systematic process for tracking needs will allow you and your agency to understand what changes may be necessary to fulfill needs and identify areas for improvement in the data system and data management policies.

These three best practices are designed to help you and your agency maintain high contextual data quality and ensure that the needs of all users are met and consistently evaluated, so that data is appropriate for all analysis and decision-making needs.